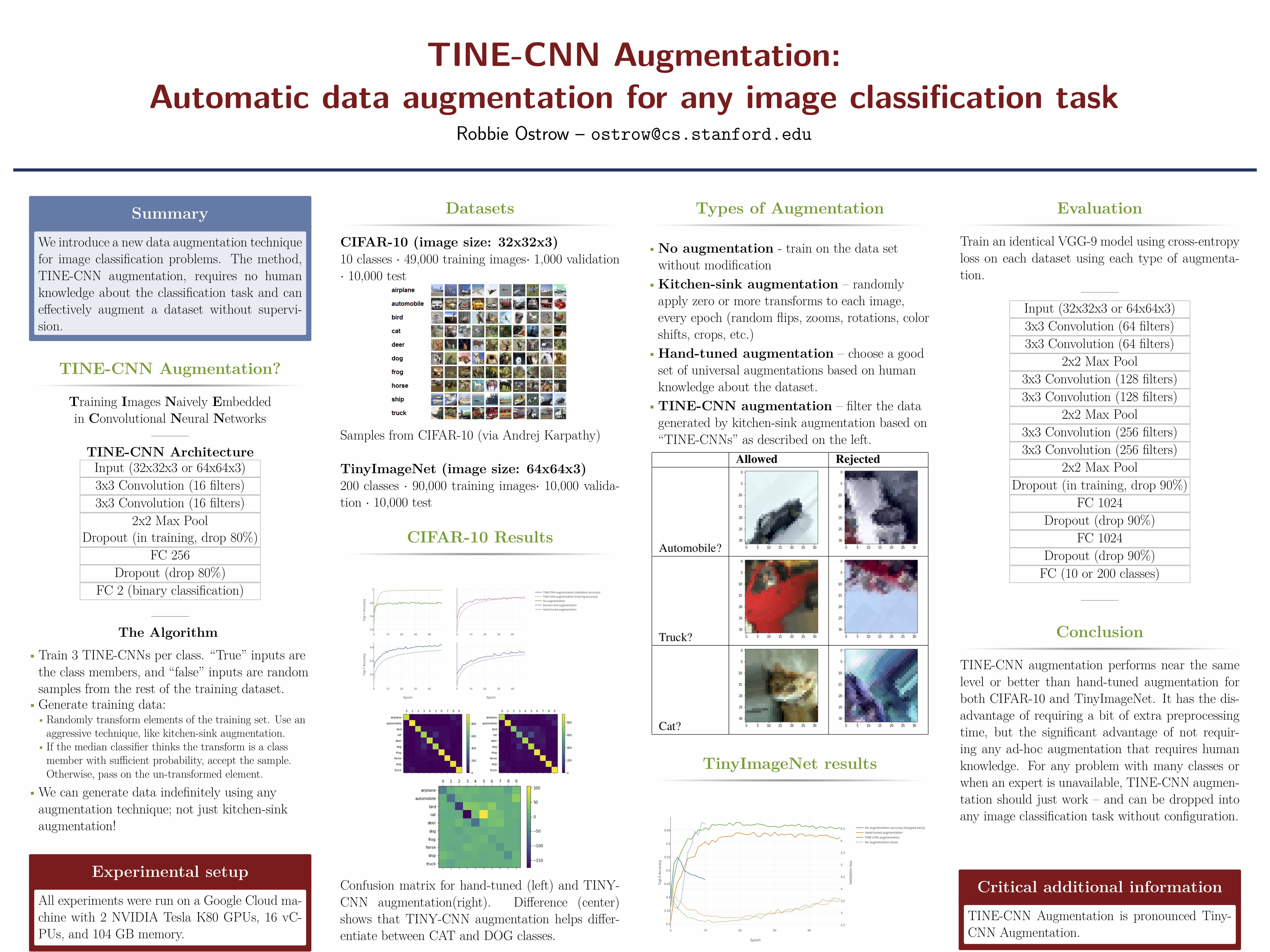

TINE-CNN Augmentation

TINE-CNN Augmentation (pronounced Tiny-CNN Augmentation) is a new way to perform automatic data augmentation for any image classification task.

Read the paper!

Why?

Neural networks need a great deal of training data. On many tasks, data augmentation and transfer learning partially mitigate this problem. However, it is not always clear which kinds of augmentation are reasonable for a specialized dataset. For example, adding large rotations to MNIST can turn a 6 into something that looks like a 9 while it is still labeled 6. In addition, deciding how to augment a training set effectively adds yet more hyperparameters to an already large space of model-design decisions. Augmentation is typically an ad-hoc process; although recent work attempts to integrate it more naturally into training, there is no generic framework that researchers commonly use. Data augmentation has nonetheless long been part of every neural-network designer's toolbox, and frameworks such as Keras provide convenient augmentation APIs. Unfortunately, those APIs still leave all of the problems above to the user, who must decide which transformations to apply and how strongly. Modern regularization techniques such as dropout can also help train CNNs on comparatively small datasets, but nothing replaces simply having more training data.
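To make the MNIST example concrete, here is a minimal sketch in Python with NumPy. The 5×3 seven-segment-style glyphs `SIX` and `NINE` are hypothetical stand-ins for real MNIST digits, and `naive_augment` is an illustrative helper, not part of TINE-CNN. It shows that an augmentation step which assumes rotation never changes the label turns a 6 into an image of a 9 that is still labeled 6.

```python
import numpy as np


def glyph(rows):
    """Convert a list of '#'/'.' strings into a binary uint8 image."""
    return np.array([[1 if ch == "#" else 0 for ch in row] for row in rows],
                    dtype=np.uint8)


# Hypothetical 5x3 seven-segment-style digits (NOT real MNIST images).
SIX = glyph(["###",
             "#..",
             "###",
             "#.#",
             "###"])
NINE = glyph(["###",
              "#.#",
              "###",
              "..#",
              "###"])


def rotate_180(img):
    """Rotate an image by 180 degrees (two 90-degree turns)."""
    return np.rot90(img, k=2)


def naive_augment(img, label):
    """Hypothetical augmentation step that assumes rotation preserves the label."""
    return rotate_180(img), label


aug_img, aug_label = naive_augment(SIX, 6)
print(np.array_equal(aug_img, NINE), aug_label)  # True 6
```

Small rotations would leave most digits recognizable; the difficulty is that the safe range is dataset-specific and has to be chosen by hand, which is exactly the extra hyperparameter burden described above.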